INESC-ID Achieves 9x Acceleration for Epistasis Disease Detection using oneAPI Tools and Intel Hardware

Summary

Lisbon-based INESC-ID is one of the largest R&D+I (Research, Development and Innovation) organizations in Portugal. Developing science-based technology since 1999, it focuses its research on 11 areas (high-performance computing and architectures, AI, human language technologies, distributed, parallel and secure systems, and seven more) and promotes cooperation between academia and industry. It is partially owned by Instituto Superior Técnico (IST), University of Lisbon, one of the top higher-education schools of engineering, science and technology in Portugal.

To quickly and accurately detect yet-to-be-discovered combinations of gene mutations that contribute to complex conditions and diseases, INESC-ID used the oneAPI Base and HPC toolkits on Intel® CPUs and GPUs, and in particular the Cache Aware Roofline Model (CARM) feature of Intel® Advisor, to boost its epistasis detection approaches.

The results:

- Close to 9x acceleration using the CARM of Intel® Advisor compared to the baseline implementation

- Up to 14% performance improvement using Intel® Data Center GPU Max Series vs NVIDIA A100*

- Up to 52% average improvement on Intel® Xeon® CPU Max Series 9480 platform over the previous generation of Intel CPUs

Using HPC to Uncover Disease Markers

The High-Performance Computing Architectures and Systems (HPCAS) Research Group at INESC-ID works on state-of-the-art topics in HPC, performance modeling, and bioinformatics, including epistasis detection: identifying combinations of specific gene mutations that make the expression of a disease and adverse health effects more likely (e.g., Alzheimer’s disease or breast cancer). Together with Aleksandar Ilic and Ricardo Nobre, the HPCAS research team (including Diogo Marques, Rafael Campos, Miguel Graca, Sergio Santander-Jimenez and Leonel Sousa) has been optimizing this type of bioinformatics application within several national and international EU research projects.

The goal: to facilitate and accelerate the search for previously unknown genotype-to-phenotype associations, which can have practical applications in personalized medicine and pharmacogenetics.

Challenge: Enormous Datasets vs Bounded Compute Resources

Epistasis detection based on an exhaustive search of multiple gene interactions provides the most reliable way to identify genetic root causes accurately and to facilitate personalized treatment and preventive solutions. But it is a very compute- and memory-intensive task:

- The datasets used in epistasis detection often represent genetic information for thousands (or even millions) of genetic markers (single nucleotide polymorphisms, SNPs).

- They can contain tens or even hundreds of thousands of samples.

- This makes epistasis detection searches very computationally challenging, especially when processing higher-order combinations, i.e., sets of three or more SNPs (e.g., for 100000 SNPs, around 166.7 trillion SNP triples need to be evaluated).

- In addition, depending on the algorithms and optimizations used, search performance can be impacted by limitations of the compute platforms’ memory subsystem in terms of capacity and bandwidth for the different cache/memory levels.
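The size of the search space quoted above follows directly from the binomial coefficient. A minimal Python sketch (the function name is chosen here for illustration) reproduces the figure for third-order searches:

```python
from math import comb


def combinations_to_evaluate(num_snps: int, order: int) -> int:
    """Number of distinct SNP sets of the given interaction order."""
    return comb(num_snps, order)


# 100,000 SNPs evaluated as triples (third-order interactions).
triples = combinations_to_evaluate(100_000, 3)
print(f"{triples:,} triples (~{triples / 1e12:.1f} trillion)")
# prints: 166,661,666,700,000 triples (~166.7 trillion)
```

Each additional interaction order multiplies the count by roughly the number of SNPs divided by the order, which is why higher-order searches become expensive so quickly.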

Designing an Experiment to Meet the Challenge

With the goal of completing a genomic scan as quickly and efficiently as possible, the HPCAS research group proposed using heterogeneous computer architectures composed of multicore CPUs and GPUs to achieve performant and energy-efficient epistasis analysis. Especially important for application optimization is the Cache-aware Roofline Model (CARM), one of their research contributions, which is fully integrated in Intel® Advisor. It gives a high-level picture of the fundamental compute or memory limits of a given compute architecture, provides an intuitive analysis of an application's potential execution bottlenecks, and effectively guides optimization efforts.

Because epistasis detection is a highly data-parallel application, a plethora of approaches have been proposed in the literature, targeting CPUs, GPUs, and specialized accelerators deployed in FPGAs. Unfortunately, even for general-purpose CPUs, most existing solutions neither fully exploit the hardware features available in modern micro-architectures nor offer the insights necessary to use compute and memory resources efficiently.

So the team looked at Intel hardware and software to provide the necessary optimizations.

Solution: Using Intel® CPUs, GPUs, and oneAPI Software

Specifically, HPCAS used the following:

- Intel® oneAPI Tools, using the CARM feature in Intel® Advisor to guide the optimization process.

- 3rd and 4th Gen Intel® Xeon® Scalable processors to take advantage of new hardware features such as the Intel® Advanced Vector Extensions 512 (Intel® AVX-512) population count instruction (POPCNT).

- Intel® Data Center GPU Max Series to develop and optimize epistasis detection.

The hotspot of epistasis detection searches is the generation of contingency tables. Each table represents the counts for all possible genetic configurations for a given combination of SNPs. Fast exhaustive methods encode the genetic information of given samples as vectors of binary data. This results in increased efficiency for memory storage, transfer, and computations. It also helps in enabling the construction of contingency tables by relying on fast bitwise AND and POPCNT instructions.
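To make the contingency-table step concrete, here is a minimal Python sketch of the three-vector binary encoding described above. It is an illustration under simplifying assumptions, not the HPCAS implementation: Python integers stand in for packed bit vectors, `bin(x).count("1")` stands in for a hardware POPCNT, the genotype data is made up, and the split between case and control samples is ignored.

```python
from itertools import product


def encode_snp(genotypes):
    """Return three bit vectors (as Python ints), one per genotype value 0, 1, 2.

    Bit i of vector g is set when sample i carries genotype g.
    """
    vectors = [0, 0, 0]
    for sample_index, genotype in enumerate(genotypes):
        vectors[genotype] |= 1 << sample_index
    return vectors


def popcount(x):
    """Count set bits; stands in for a hardware POPCNT instruction."""
    return bin(x).count("1")


def contingency_table(snp_a, snp_b, snp_c):
    """Count samples for each of the 3 x 3 x 3 = 27 genotype combinations."""
    table = {}
    for ga, gb, gc in product(range(3), repeat=3):
        # AND keeps only samples that match all three genotypes at once.
        table[(ga, gb, gc)] = popcount(snp_a[ga] & snp_b[gb] & snp_c[gc])
    return table


# Hypothetical genotypes for six samples (values 0, 1, or 2 per SNP).
a = encode_snp([0, 1, 2, 0, 1, 2])
b = encode_snp([0, 0, 1, 1, 2, 2])
c = encode_snp([2, 1, 0, 2, 1, 0])
table = contingency_table(a, b, c)
print(sum(table.values()))  # every sample falls into exactly one cell
```

In a real search, this table would be built once per SNP combination and per phenotype class, which is why the AND and POPCNT operations dominate the run time.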

CARM Optimizations

The Intel Advisor CARM analysis feature was used to detect execution bottlenecks. It also provided useful hints regarding which optimizations to apply to fully exploit device capabilities. The guidelines provided by CARM were fundamental to achieving speedups of around 9x when compared to the baseline code.

Figure 1 depicts CARM plots for processing a dataset with 4096 SNPs and 16384 samples on a dual-socket node with Intel Xeon CPU Max 9480 processors. It shows the Scalar (Fig. 1a) and Vector AVX-512 (Fig. 1b) performance upper bounds of this platform by correlating the peak compute throughput (GINTOP/s) and the bandwidth of DRAM and the cache levels with arithmetic intensity (INTOP/Byte).

Figure 1. Optimization of epistasis detection searches in Intel Advisor CARM
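The rooflines in such a plot follow the standard roofline bound: attainable performance is the minimum of the peak compute throughput and the product of memory bandwidth and arithmetic intensity. The Python sketch below illustrates that relation only. All platform numbers in it are hypothetical placeholders, not measurements from this study, and Intel Advisor derives the real values by benchmarking the target system.

```python
def attainable_performance(arithmetic_intensity, peak_compute, bandwidth):
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(peak_compute, bandwidth * arithmetic_intensity)


# Hypothetical platform numbers, NOT measurements from the study.
PEAK_GINTOPS = 4000.0   # peak integer throughput, GINTOP/s
L3_BANDWIDTH = 1000.0   # GB/s
DRAM_BANDWIDTH = 300.0  # GB/s

for ai in (0.5, 2.0, 8.0):  # arithmetic intensity in INTOP/Byte
    l3_bound = attainable_performance(ai, PEAK_GINTOPS, L3_BANDWIDTH)
    dram_bound = attainable_performance(ai, PEAK_GINTOPS, DRAM_BANDWIDTH)
    print(f"AI={ai}: L3 bound {l3_bound} GINTOP/s, DRAM bound {dram_bound} GINTOP/s")
```

A kernel that sits on a memory roofline gains from reducing traffic or raising arithmetic intensity, while a kernel under the compute roofline gains from better use of the execution units, for example through vectorization.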


- The first implementation of the proposed algorithm, V1 (circle shape) in Figure 1a, employs bitwise AND and POPCNT to construct contingency tables by using three vectors to encode the SNP information. This implementation achieves a performance of 724 GINTOP/s, which is close to the L3 roofline. Following the CARM guidance, the potential bottlenecks of this kernel were identified as memory-bandwidth limitations (L3/DRAM) and/or underutilization of compute units (the next roofline to be intersected is the compute-bound roofline).

- In V2 (square shape), the algorithm has been restructured to alleviate the memory bottlenecks. V2 uses two vectors per SNP (instead of three), and the third genotype is reconstructed with simple ALU operations. In V2, these two vectors are also split according to phenotype, which makes the phenotype value implicitly known and further reduces memory storage requirements and operations. As can be observed, V2 achieves very similar performance in GINTOP/s when compared to V1 (V2: 723 / V1: 724), although execution is almost 2× faster. The throughput metric stays flat because V2 also performs fewer operations: the operation count and the execution time drop by nearly the same factor, so their ratio barely changes.

- In V3 (triangle shape), we aimed to further alleviate memory bottlenecks by applying memory-tiling techniques. However, this did not yield any significant performance increase.

- In V4, the focus has been shifted towards overcoming compute-related constraints (as also initially suggested by CARM). Vectorization has been applied and the algorithm has been further restructured to support SIMD AVX-512 execution efficiently. As a result, V4 (diamond shape in Figure 1b) moves to the right, reaching higher arithmetic intensity and providing significant performance improvements. V4 achieves 3230 GINTOP/s (4.6× higher than V3).

With the help of the Intel Advisor’s Cache Aware Roofline Model, we achieved 8.9× faster execution compared to the baseline implementation (V1) for the dataset considered in this study.
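The two-vector encoding introduced in V2 can be illustrated with a short Python sketch. The text above only states that the third genotype is reconstructed with simple ALU operations. The bitwise NOR used here is an assumption about how that reconstruction could work, and the function names and sample data are hypothetical.

```python
def encode_two_vectors(genotypes):
    """Store only the bit vectors for genotypes 0 and 1 (genotype 2 is implied)."""
    g0 = g1 = 0
    for sample_index, genotype in enumerate(genotypes):
        if genotype == 0:
            g0 |= 1 << sample_index
        elif genotype == 1:
            g1 |= 1 << sample_index
    return g0, g1


def reconstruct_genotype_2(g0, g1, num_samples):
    """Recover the genotype-2 vector as NOR(g0, g1), limited to valid sample bits."""
    mask = (1 << num_samples) - 1
    return ~(g0 | g1) & mask


# Hypothetical genotypes for six samples.
genotypes = [0, 1, 2, 2, 0, 1]
g0, g1 = encode_two_vectors(genotypes)
g2 = reconstruct_genotype_2(g0, g1, len(genotypes))
print(bin(g0), bin(g1), bin(g2))
```

Storing two vectors instead of three cuts the data read per SNP by a third, at the cost of one OR and one NOT per reconstructed word, which trades memory traffic for cheap compute.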

4th Gen Intel® Xeon® CPU Max Series

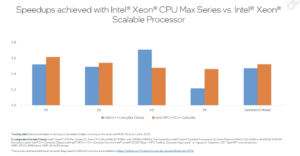

Figure 2 shows the speedup obtained¹ for code versions V1 to V4 when moving from a dual-socket 3rd Gen Intel Xeon Scalable processor platform (Intel Xeon Platinum 8360Y) with 36 cores per CPU to a dual-socket Intel Xeon CPU Max Series 9480 platform with 56 cores per CPU.

Figure 2. Speedups achieved with Intel® Xeon® CPU Max Series vs. Intel® Xeon® Scalable Processor


On average, the system with Xeon 9480 CPUs achieved a performance improvement of 47% compared to the system with Xeon 8360Y CPUs. Factoring out the increase in the number of cores (Xeon 9480: 56 cores / Xeon 8360Y: 36 cores, per CPU) and the difference in clock frequencies (Xeon 9480: 1.9 GHz / Xeon 8360Y: 2.4 GHz), the Intel® Xeon® CPU Max Series provides a significant boost in epistasis detection performance: a 20% increase per core per cycle.

Repeating the same experiments after moving from the Intel® C++ Compiler Classic to the new Intel® oneAPI DPC++/C++ Compiler, which is built on the open-source LLVM* project and its Clang* frontend, resulted in even higher improvements (52% on full systems, 24% per core per cycle).
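The per-core, per-cycle normalization can be checked with a few lines of Python. This is a back-of-the-envelope sketch that assumes the gain is obtained by dividing the full-system speedup by the ratio of core counts and the ratio of the clock frequencies quoted above. The function name is illustrative.

```python
def per_core_cycle_gain(system_speedup, cores_new, cores_old, ghz_new, ghz_old):
    """Speedup left over after factoring out core count and clock frequency."""
    resource_ratio = (cores_new / cores_old) * (ghz_new / ghz_old)
    return system_speedup / resource_ratio - 1.0


# Full-system improvements reported in the text: 47% and 52%.
classic = per_core_cycle_gain(1.47, 56, 36, 1.9, 2.4)
oneapi = per_core_cycle_gain(1.52, 56, 36, 1.9, 2.4)
print(f"Compiler Classic: {classic:.1%}, oneAPI DPC++/C++: {oneapi:.1%}")
```

With the rounded inputs, the result lands within about one percentage point of the reported 20% and 24%. The small gap may stem from rounding of the averages or from averaging the normalized values per code version.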

SYCL*-based Epistasis Detection on Intel GPUs

In one of our recent publications, we showed that the performance of SYCL* code is virtually identical to hand-tuned CUDA* code on NVIDIA GPUs while also achieving high performance on parallel devices from other vendors.

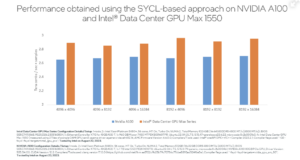

Furthermore, our most recent experiments have shown that the SYCL implementation achieves performance on the Intel Data Center GPU Max Series that is competitive with that of high-end NVIDIA and AMD GPUs. As presented in Figure 3, the Intel GPU outperforms the NVIDIA A100* GPU across a wide selection of dataset dimensions (SNPs x Samples), achieving performance improvements of up to 14% using the same application configuration².

Figure 3. Performance obtained using the SYCL-based approach on NVIDIA A100 and Intel® Data Center GPU Max 1550


Conclusions

This case study shows the importance of oneAPI tools, which not only helped in the development and optimization of state-of-the-art bioinformatics code, but also helped scale the solution to other hardware. The presented results confirm that the Intel Xeon CPU Max Series delivers higher performance for epistasis detection than 3rd Gen Intel Xeon Scalable processors (previously the fastest CPUs for epistasis detection), and that the Intel Data Center GPU Max Series outperforms NVIDIA A100 GPUs.

Moving forward, INESC-ID will continue to optimize its specialized code base using oneAPI Toolkits to achieve higher performance.

Additional Resources

If you are interested in our research, you can look at our publications, including the available source code, and other online resources.

D. Marques, R. Campos, S. Santander-Jiménez, Z. Matveev, L. Sousa, and A. Ilic. Unlocking Personalized Healthcare on Modern CPUs/GPUs: Three-way Gene Interaction Study. Paper presented at the 36th IEEE International Parallel & Distributed Processing Symposium (IPDPS), Lyon, France, 2022. DOI: 10.1109/IPDPS53621.2022.00023

R. Nobre, A. Ilic, S. Santander-Jiménez, and L. Sousa. Fourth-Order Exhaustive Epistasis Detection for the xPU Era. Paper presented at the 50th International Conference on Parallel Processing (ICPP), Chicago, USA, 2021. DOI: 10.1145/3472456.3472509

D. Marques, A. Ilic, Z. A. Matveev, and L. Sousa. Application-driven Cache-aware Roofline Model. Future Generation Computer Systems 107 (2020): 257-273. DOI: 10.1016/j.future.2020.01.044

A. Ilic, F. Pratas, and L. Sousa. Cache-aware Roofline Model: Upgrading the Loft. IEEE Computer Architecture Letters 13.1 (2013): 21-24. DOI: 10.1109/L-CA.2013.6

DevMesh entries for the oneAPI Great Cross-Architecture Challenge (two of the top five winners).

- Boosting epistasis detection on Intel CPU+GPU systems.

- Cross-architecture high-order exhaustive epistasis detection on CPU and GPU devices.

Get the Software

Add performance portability to your compute-intensive high-performance cross-architecture workload.

You can download and install Intel® oneAPI Base Toolkit as well as the standalone components used in our study at the following links: